Thousand Brains Principles

We have identified several core neocortical principles that guide our software architecture design. These principles are fundamentally different from those used by current AI systems. We believe that by adopting them, future AI systems will mitigate many of the problems that plague today’s AI, such as the need for huge datasets, long training times, high energy consumption, brittleness, inability to learn continuously, lack of explicit reasoning and of the ability to extrapolate, bias, and lack of interpretability.

In addition, future AI systems built on the Thousand Brains principles will enable applications that cannot even be attempted with today’s AI.

Sensorimotor Learning

In every known intelligent species, learning is a fundamentally sensorimotor process. Neuroscience teaches us that every part of the neocortex is involved in processing movement signals and sends outputs to subcortical motor areas. We therefore believe that the future of AI lies in sensorimotor learning and in models designed for such settings.

Most AI systems today learn from huge, static datasets, which has major implications. First, the process is costly because these datasets must be created and labeled. Second, these systems cannot learn continuously from new information without retraining on the entire dataset. A system based on the Thousand Brains Theory will be able to ingest a continuous stream of (self-generated) sensory and motor information, much as a child does when playing with a new toy: exploring it with all her senses and building a model of the object. The system actively interacts with the environment to obtain the information it needs to learn or to perform a given task. This active learning makes the system efficient and allows it to adapt quickly to new inputs.

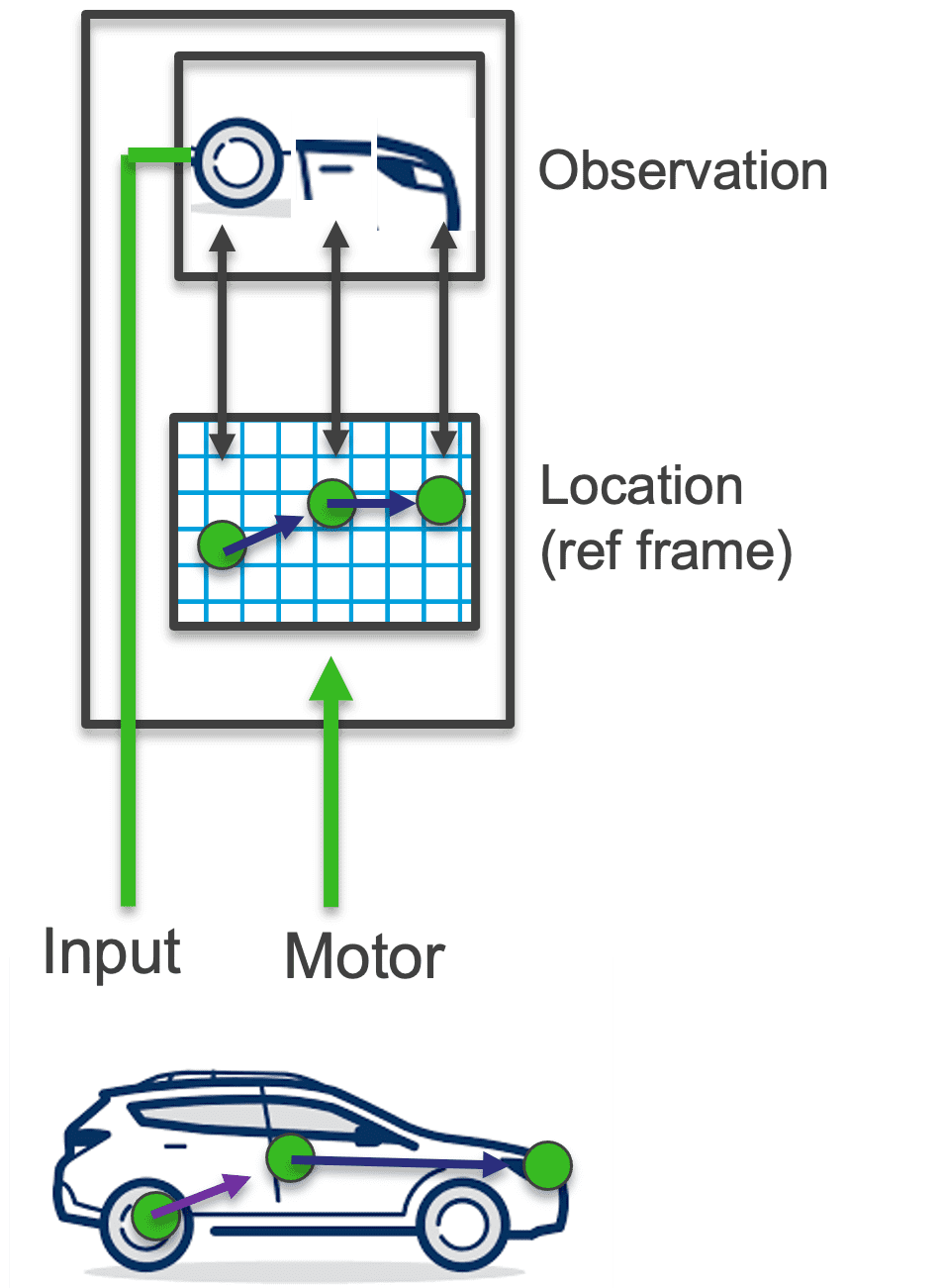

Reference Frames

The brain evolved efficient mechanisms, such as grid and place cells, to understand and store the constant stream of sensorimotor inputs. The representations the brain learns are structured, which makes it possible to quickly perform tasks necessary for survival, such as path integration and planning.

While some existing AI systems are trained in sensorimotor settings (such as in reinforcement learning), the vast majority use the same modeling principles (such as artificial neural networks) as in supervised settings, bringing with them the same problems. In contrast, our models are specifically designed to learn from sensorimotor data and to build up structured representations. These structured models allow for quick, sample-efficient learning and generalization. They also make the system more robust, give it the ability to reason, and enable easy interaction with environments and novel situations.
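To make the idea of a structured, location-based representation concrete, here is a minimal sketch. It is purely illustrative and is not the project's implementation: `ObjectModel`, `integrate_path`, and `explore` are hypothetical names, and the 2D integer grid is an assumption chosen for brevity. It shows path integration (summing movements to track the current location in an object's reference frame) and a model that stores the feature sensed at each location.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Assumption: locations are 2D integer coordinates in the object's own frame.
Location = tuple[int, int]


@dataclass
class ObjectModel:
    """Hypothetical structured model: features stored at locations."""

    features_at: dict[Location, str] = field(default_factory=dict)

    def observe(self, location: Location, feature: str) -> None:
        # Learning is incremental: each observation updates the model in place.
        self.features_at[location] = feature

    def predict(self, location: Location) -> str | None:
        # Returns the stored feature, or None for an unexplored location.
        return self.features_at.get(location)


def integrate_path(start: Location, movements: list[Location]) -> Location:
    """Path integration: add up displacements to get the current location."""
    x, y = start
    for dx, dy in movements:
        x += dx
        y += dy
    return (x, y)


def explore(model: ObjectModel, start: Location,
            steps: list[tuple[Location, str]]) -> Location:
    """Move, sense, and store. Each step is (movement, feature sensed after it)."""
    location = start
    for movement, feature in steps:
        location = integrate_path(location, [movement])
        model.observe(location, feature)
    return location


# Usage: a sensor starts at the rim of a mug and moves over its surface.
mug = ObjectModel()
mug.observe((0, 0), "rim")
end = explore(mug, (0, 0), [((0, -1), "wall"), ((0, -1), "base"), ((1, 0), "handle")])
print(end)                   # (1, -2)
print(mug.predict((0, -1)))  # wall
```

Because the model is keyed by location rather than by the order of observations, the same object can be recognized or extended along a different exploration path.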

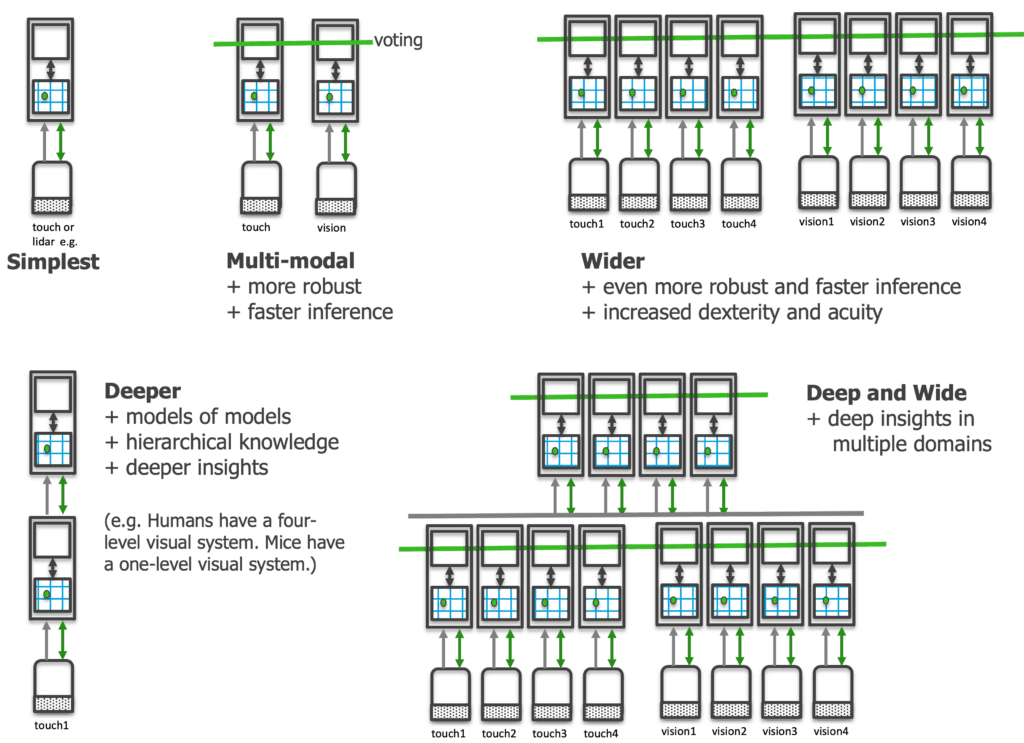

Modularity (A Thousand Brains)

The neocortex is made up of thousands of cortical columns. Each of these columns is a sensorimotor modeling system in its own right, with a complex and intricate structure. Neuroscientists describe six layers, which are often divided into further sub-layers the closer you look. Over the past years, we have studied in detail the structure of cortical columns and the connections within and between them.

In our implementation, we have cortical column-like units that can each learn complete objects from sensorimotor data. They communicate using a common communication protocol. Inspired by long-range connections in the neocortex, the units can exchange information laterally to reach a quick consensus and can also be arranged hierarchically to model compositional objects. The common communication protocol makes the system modular and allows for easy cross-modal communication and scalability.
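As a rough illustration of how lateral communication could lead to a quick consensus, the sketch below has each unit report the set of objects it currently considers possible and then tallies those votes. This is an assumed, simplified scheme for illustration only: the `vote` function and the representation of hypotheses as sets of object names are hypothetical and are not taken from the project's code.

```python
from collections import Counter


def vote(hypotheses_per_module: list[set[str]]) -> set[str]:
    """Return the object(s) supported by the largest number of modules.

    Assumption: each module reports a plain set of candidate object names.
    """
    tally: Counter[str] = Counter()
    for hypotheses in hypotheses_per_module:
        tally.update(hypotheses)
    if not tally:
        return set()
    top = max(tally.values())
    # Ties are kept, so an ambiguous vote returns more than one object.
    return {name for name, count in tally.items() if count == top}


# Usage: three units sense different parts of the same object.
consensus = vote([
    {"mug", "bowl"},         # unit touching a curved wall
    {"mug", "can"},          # unit touching a flat base
    {"mug", "bowl", "can"},  # unit that has seen very little so far
])
print(consensus)  # {'mug'}
```

Each unit alone is ambiguous here, but a single round of voting narrows the answer to one object, which is the intuition behind reaching consensus without every unit exploring the whole object.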

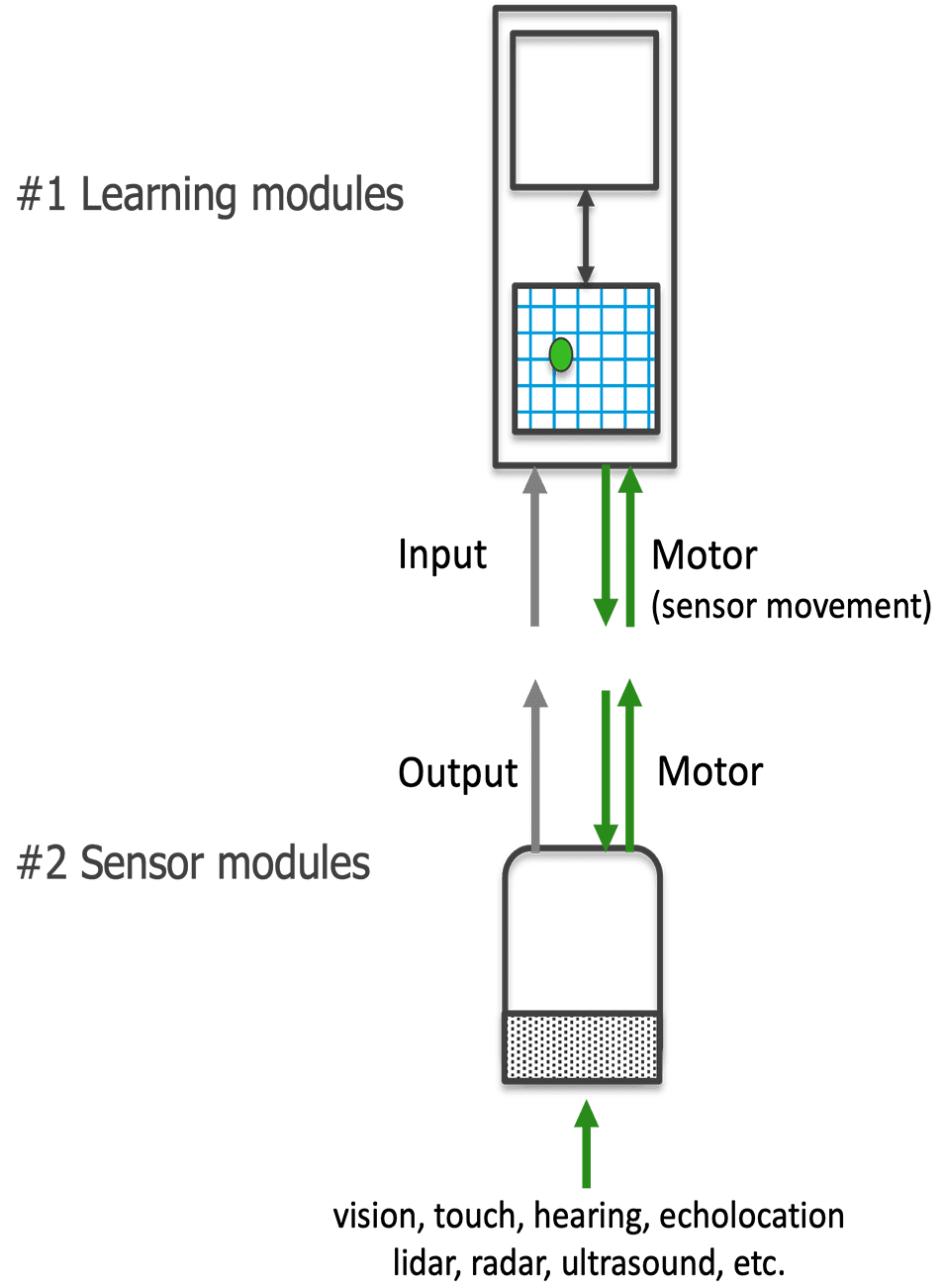

The SDK

In our SDK, we will provide a general framework for learning with two types of modules: learning modules (LMs) and sensor modules (SMs). Learning modules are like cortical columns and learn structured models of the world from sensorimotor data. Sensor modules are the interface between the environment and the system, and convert input from a specific modality into the common communication protocol.

An LM does not care which modality its input comes from, and multiple LMs receiving input from different modalities can communicate effortlessly. Practitioners can implement custom SMs for their specific sensors and plug them into the system. A system can also use different types of LMs, as long as they all adhere to the common communication protocol. This makes the system extremely general and flexible.
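The division of labor between SMs and LMs can be sketched as follows. This is a hypothetical illustration, not the SDK's API: the `Message` format, the `encode` and `learn` method names, and the raw input fields (`pressure_variance`, `fingertip_position`, `rgb`, `patch_position`) are all assumptions made for the example. It shows two modality-specific sensor modules producing the same message type, and one learning module consuming both without knowing where they came from.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Protocol


@dataclass(frozen=True)
class Message:
    """Hypothetical common message: a feature sensed at a location."""

    location: tuple[float, float, float]
    feature: str


class SensorModule(Protocol):
    """Anything with an encode() method returning a Message counts as an SM."""

    def encode(self, raw: dict) -> Message: ...


class TouchSM:
    """Custom SM for an assumed touch sensor."""

    def encode(self, raw: dict) -> Message:
        feature = "rough" if raw["pressure_variance"] > 0.5 else "smooth"
        return Message(location=raw["fingertip_position"], feature=feature)


class VisionSM:
    """Custom SM for an assumed camera patch."""

    def encode(self, raw: dict) -> Message:
        r, g, b = raw["rgb"]
        feature = "red" if r > max(g, b) else "not_red"
        return Message(location=raw["patch_position"], feature=feature)


@dataclass
class LearningModule:
    """Modality-agnostic: it only ever sees Message objects."""

    model: dict[tuple[float, float, float], str] = field(default_factory=dict)

    def learn(self, message: Message) -> None:
        self.model[message.location] = message.feature


# Usage: one LM learns from touch and vision through the same interface.
lm = LearningModule()
touch_msg = TouchSM().encode(
    {"pressure_variance": 0.8, "fingertip_position": (0.0, 0.0, 0.0)}
)
vision_msg = VisionSM().encode({"rgb": (200, 30, 30), "patch_position": (0.0, 0.1, 0.0)})
lm.learn(touch_msg)
lm.learn(vision_msg)
print(lm.model)  # {(0.0, 0.0, 0.0): 'rough', (0.0, 0.1, 0.0): 'red'}
```

Adding a new sensor in this sketch means writing one more class with an `encode` method; the learning module is untouched, which is the kind of plug-in flexibility the shared protocol is meant to provide.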

For more details, you can read our documentation here.

Have questions? Join our Discourse!