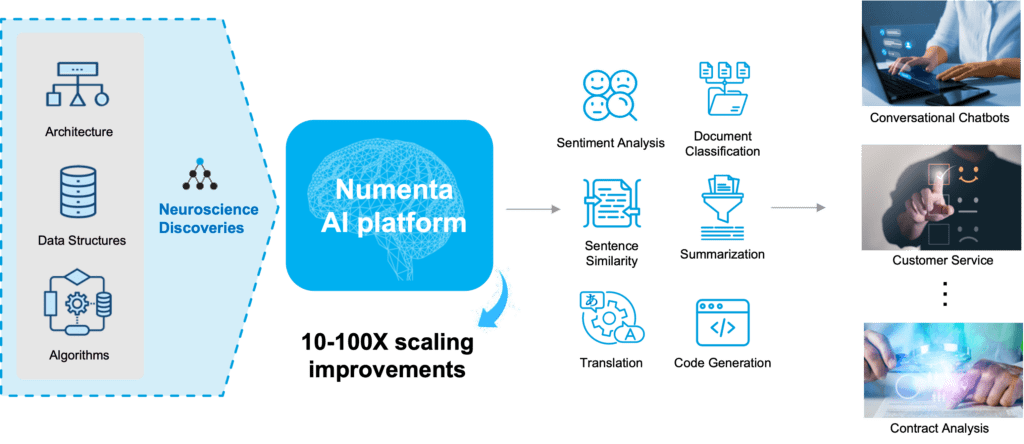

Numenta AI Platform

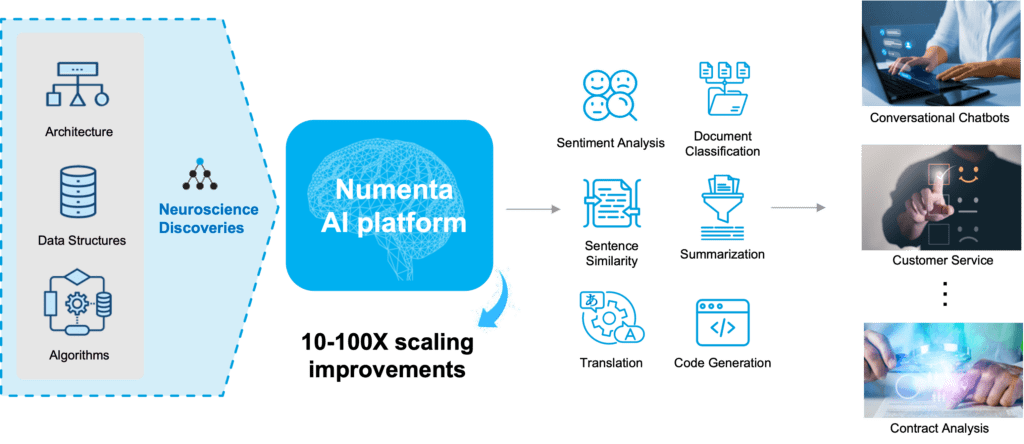

AI technology rooted in neuroscience and run on commodity CPUs

Lightning Speed

Achieve speedups of 10x to over 100x on CPUs without sacrificing accuracy

Power Efficient

Run on CPUs with 5-20x power savings over GPUs

Memory Friendly

Reduce memory usage and simplify memory management systems

Effective Scaling

Easily scale large AI models on CPU-only systems

Boosts AI inference performance on commodity CPUs

Our technology achieves unparalleled speedups and performance improvements on CPUs for models ranging from BERTs to multi-billion-parameter GPTs.

Enables ultimate AI infrastructure efficiency

Simplify infrastructure management by flexibly allocating cores to leverage untapped CPUs, maximize resource utilization, and minimize total cost of ownership (TCO).
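As a rough illustration of what allocating cores can look like, the Python sketch below splits a host's available cores into contiguous, near-equal groups, one per model instance. It is a generic example under our own assumptions, not Numenta's scheduler; the function name `partition_cores` and the choice of four instances are hypothetical.

```python
import os


def partition_cores(cores, n_instances):
    """Split CPU core IDs into n_instances contiguous, near-equal groups.

    Hypothetical helper for illustration. If there are more instances
    than cores, the trailing groups are empty.
    """
    if n_instances <= 0:
        raise ValueError("n_instances must be positive")
    cores = sorted(cores)
    base, extra = divmod(len(cores), n_instances)
    groups, start = [], 0
    for i in range(n_instances):
        # The first `extra` groups each take one leftover core.
        size = base + (1 if i < extra else 0)
        groups.append(cores[start:start + size])
        start += size
    return groups


if __name__ == "__main__":
    # os.sched_getaffinity is Linux-only; fall back to os.cpu_count() elsewhere.
    if hasattr(os, "sched_getaffinity"):
        available = sorted(os.sched_getaffinity(0))
    else:
        available = list(range(os.cpu_count() or 1))
    for i, group in enumerate(partition_cores(available, 4)):
        print(f"instance {i}: cores {group}")
```

On Linux, each worker process could then pin itself to its group with `os.sched_setaffinity(0, group)`. Contiguous groups keep the logic simple; on real hardware you may want to group cores by socket or cache topology instead.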

Seamlessly integrates with standard workflows

Built on the Triton Server and standard inference protocols, Numenta’s AI platform fits right into existing infrastructure and works with standard MLOps.

Achieves dramatic performance improvements

Numenta Inference Server

Built on the Triton server, our inference server uses industry-standard protocols and a simple HTTP-based API, which allows seamless integration into almost any standard MLOps solution, such as Kubernetes.

When run on CPUs, a single instance of the server can run dozens of different models in parallel without the need for batching or synchronization, providing the ultimate infrastructure flexibility.
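Since the server is built on Triton, a client would most likely talk to it over Triton's standard KServe v2 HTTP/REST inference protocol. The Python sketch below assumes exactly that: it builds a v2 JSON request body and POSTs it to `/v2/models/{model_name}/infer` using only the standard library. The base URL and port (`http://localhost:8000`, Triton's default HTTP port), the model name `my-bert-model`, and the tensor names `input_ids`, `attention_mask`, and `logits` are hypothetical placeholders; the real values depend on the deployment.

```python
import json
import math
import urllib.request


def build_infer_request(inputs, output_names=None):
    """Build a KServe v2 (Triton HTTP/REST) JSON inference request body.

    inputs: iterable of (name, shape, datatype, flat_data) tuples, where
    flat_data is the tensor flattened in row-major order.
    """
    tensors = []
    for name, shape, datatype, data in inputs:
        data = list(data)
        # The v2 protocol expects the element count to match the shape.
        if math.prod(shape) != len(data):
            raise ValueError(f"{name}: shape {shape} does not match {len(data)} elements")
        tensors.append({"name": name, "shape": list(shape), "datatype": datatype, "data": data})
    body = {"inputs": tensors}
    if output_names:
        body["outputs"] = [{"name": n} for n in output_names]
    return body


def infer(base_url, model_name, body, timeout=10.0):
    """POST the body to /v2/models/{model_name}/infer and return the decoded JSON."""
    url = f"{base_url.rstrip('/')}/v2/models/{model_name}/infer"
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Hypothetical tensor names; the token IDs are placeholders.
    body = build_infer_request(
        [("input_ids", (1, 4), "INT64", [101, 7592, 2088, 102]),
         ("attention_mask", (1, 4), "INT64", [1, 1, 1, 1])],
        output_names=["logits"],
    )
    print(json.dumps(body))
    # Requires a running server, e.g.:
    # result = infer("http://localhost:8000", "my-bert-model", body)
```

In the v2 protocol each model is addressed by name in the URL path, so a client can target a different model on the same server simply by changing `model_name`.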

Numenta Training Module

Our training module makes it easy to fine-tune a model for a specific task or to increase model accuracy for particular domains or use cases. Fine-tuning can be useful for adapting non-generative models by adding the necessary model heads, or for performing additional training on a generative model so that it gives more appropriate responses.

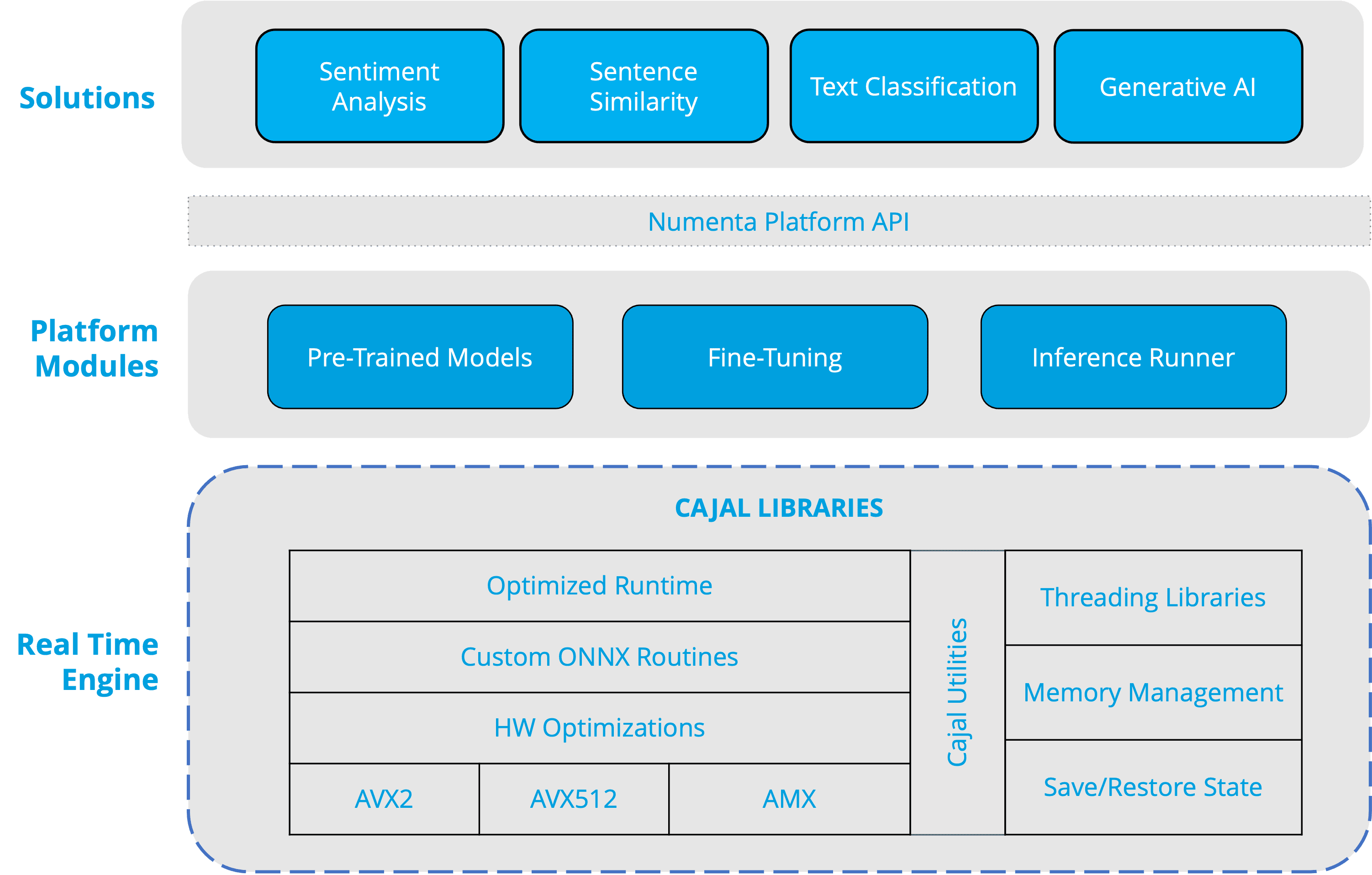

Cajal Libraries

Within our Inference Server is a real-time engine built on our internal Cajal Libraries, named after Santiago Ramón y Cajal, widely regarded as the father of modern neuroscience. These libraries, written in C++ and assembly, are designed to minimize data movement, maximize cache usage, and optimize memory bandwidth, enabling efficient memory sharing across models.

The Cajal Libraries include an optimized runtime, custom ONNX routines, and a set of hardware optimizations that leverage SIMD and matrix instructions (AVX2, AVX-512, and AMX) for unparalleled throughput without sacrificing accuracy.
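To check whether a given Linux host exposes the instruction sets named above, one can read the CPU flags from `/proc/cpuinfo`. The Python sketch below does that; the mapping from feature names to flags (`avx2`, `avx512f` for the AVX-512 foundation subset, and `amx_tile`, `amx_bf16`, `amx_int8` for AMX) is our own assumption about what counts as support, and the function names are hypothetical. It says nothing about how the Cajal Libraries themselves choose between these code paths.

```python
from pathlib import Path

# Assumed mapping from feature name to the Linux /proc/cpuinfo flags that must
# all be present. avx512f is only the AVX-512 foundation subset; treating AMX
# as available only with tile, BF16, and INT8 support is a conservative choice.
FEATURE_FLAGS = {
    "AVX2": {"avx2"},
    "AVX-512": {"avx512f"},
    "AMX": {"amx_tile", "amx_bf16", "amx_int8"},
}


def parse_cpu_flags(cpuinfo_text):
    """Return the set of flags on the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def detect_features(flags):
    """Map each feature name to True only if all of its required flags are present."""
    return {name: required <= flags for name, required in FEATURE_FLAGS.items()}


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")  # Linux only; other systems report nothing
    text = path.read_text() if path.exists() else ""
    for name, ok in detect_features(parse_cpu_flags(text)).items():
        print(f"{name}: {'yes' if ok else 'no'}")
```

A workload that only uses BF16 tiles could relax the AMX requirement to `amx_tile` and `amx_bf16`.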

Leverages insights from cortical circuitry, structure and function

Data Structures

Based on how information is represented in the brain, Numenta data structures are highly flexible and versatile, applicable to many different problems in many different domains.

Architecture

Based on biophysical properties of the brain, Numenta’s network architecture dynamically restricts and routes information in a context-specific manner, yielding low-cost solutions for a range of problems.

Algorithms

Based on how information is used in the brain, Numenta algorithms intelligently act on data and adapt as the nature of the problem changes.

RESULTS

Dramatically Accelerate Large Language Models on CPUs

Why Numenta

At the Forefront of Deep Learning Innovation

Rooted in deep neuroscience research

Leverage Numenta’s unique neuroscience-based approach to create powerful AI systems

10-100x performance improvements

Reduce model complexity and overhead costs with 10-100x performance improvements

Seamless adaptability and scalability

Discover the perfect blend of flexibility and customization, designed to cater to your business needs

Deploy On-Premises or via Your Favorite Cloud Provider

- Full control over models, data and hardware

- Utmost security and privacy

- Low network bandwidth costs

- Integrate into existing hardware with no additional costs

Case Studies

Developing AI-powered games on existing CPU infrastructures without breaking the bank

AI is opening a new frontier for gaming, enabling more immersive and interactive experiences than ever before. NuPIC enables game studios and developers to leverage these AI technologies on existing CPU infrastructure as they embark on building new AI-powered games.

20x inference acceleration for long sequence length tasks on Intel Xeon Max Series CPUs

Numenta technologies running on 4th Gen Intel Xeon Max Series CPUs enable unparalleled performance speedups for tasks with longer sequence lengths.

Numenta + Intel achieve 123x inference performance improvement for BERT Transformers

Numenta technologies combined with the new Advanced Matrix Extensions (Intel AMX) in the 4th Gen Intel Xeon Scalable processors yield breakthrough results.