This month I’m pleased to share several updates with you, including new machine learning podcasts, an interview with our co-founder Jeff Hawkins, and more.

Subutai Ahmad on This Week in Machine Learning (TWIML)

Subutai Ahmad, our VP Research, was a recent guest on the TWIML AI podcast. In his hour-long discussion with host Sam Charrington, he describes the fundamental neuroscience research that forms the foundation for our machine learning work. They talk about the significance of cortical columns in the brain as well as in neural networks, the importance of sensorimotor models, and what it means for a model to have inherent 3-D understanding. Lastly, they discuss sparsity in machine learning: the differences between sparse and dense networks, and how Numenta is implementing sparsity to optimize the performance of current deep learning networks.

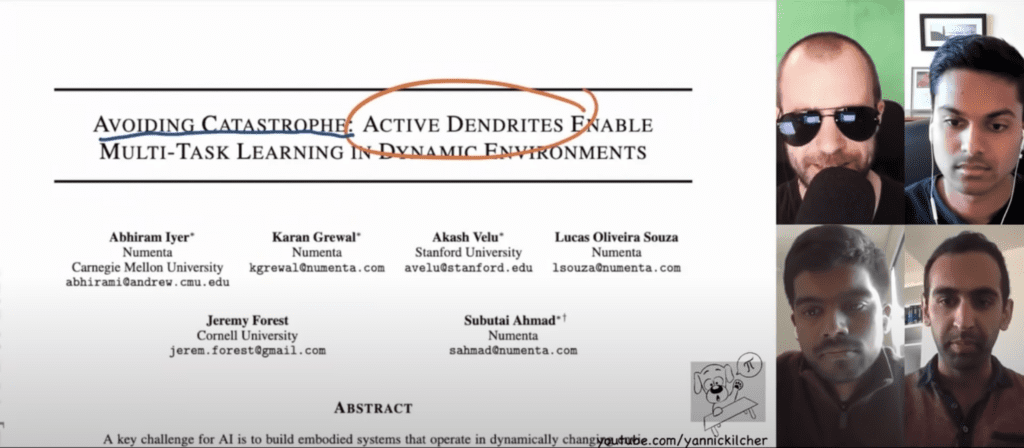

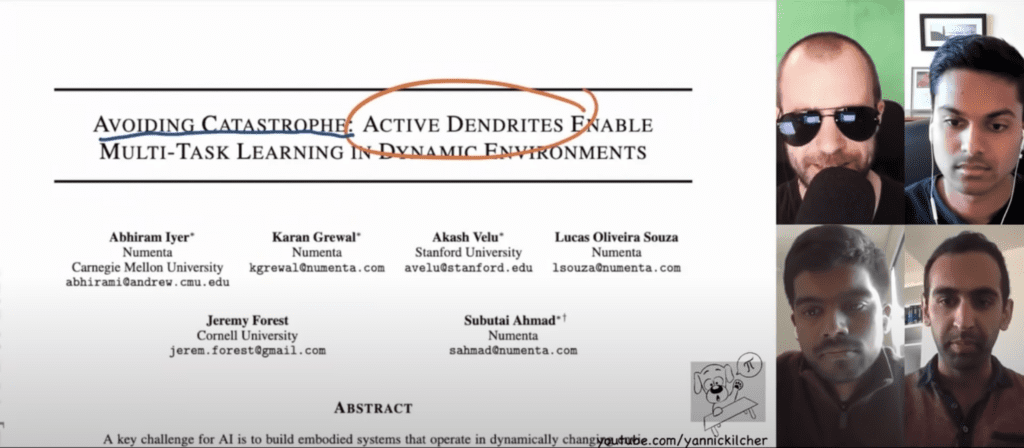

A Deep Dive on Active Dendrites for Multi-task and Continual Learning with Yannic Kilcher

– Yannic Kilcher, AI researcher and content creator

In our last newsletter, I shared our new research paper, Avoiding Catastrophe: Active Dendrites Enable Multi-Task Learning in Dynamic Environments. Yannic Kilcher recently reviewed the paper in a two-part video on his popular ML and AI YouTube channel. In the first video, Yannic gives an overview of the paper before breaking it down for his viewers, reviewing it in depth, and raising some questions. In part two, he discusses the paper with the three first authors: Abhiram Iyer, Karan Grewal, and Akash Velu. They answer Yannic’s questions and introduce new insights.

If you’re interested in learning how neurobiological principles can be applied to deep learning in general, and how active dendrites can enable continual learning specifically, watch both videos.

The Sequence Chat: Jeff Hawkins

Our co-founder Jeff Hawkins was profiled in The Sequence, a newsletter dedicated to educating its subscribers about artificial intelligence and machine learning. In this interview, Jeff explains how he got started in neuroscience and machine learning; how our theory of intelligence maps to AI; and what he thinks will pave the way for general-purpose AI.

DigitalFUTURES: Jeff Speaks at AI, Neuroscience, and Architecture Series

What do AI, neuroscience, and architecture have in common? Quite a bit, it turns out. Jeff recently spoke at an event for DigitalFUTURES, an online international architectural education platform.

In this 2+ hour fireside chat, Jeff discusses the ideas in his book, A Thousand Brains, and addresses the architectural significance of some of the main concepts, like mapping, memory, and modeling. The thoughtful Q&A underscores how the theory and ideas in the book can apply to many fields.

New on the Blog: Complementary Sparsity

In our last newsletter, I highlighted our new research paper, “Two Sparsities Are Better Than One: Unlocking the Performance Benefits of Sparse-Sparse Networks,” which introduces a new technique called Complementary Sparsity. Though sparsity is becoming increasingly popular in the field of machine learning, many sparse networks are constrained by existing hardware architectures. When sparsity techniques don’t map well to the hardware, the result is poor performance. Complementary Sparsity is a novel form of sparsity that’s structured to match the requirements of the hardware, rather than requiring the hardware to accommodate unstructured sparse networks.

Brains@Bay Meetup on Neuromodulators, April 13, 10am PDT

Mark your calendars for our next Brains@Bay Meetup: “Exploring Neuromodulators and How They Might Impact AI.” We’ve lined up three speakers to discuss an important yet often overlooked topic in AI: how neuromodulatory systems enable better and more flexible learning in deep neural networks.

We’ll hear from Jie Mei (The Brain and Mind Institute), Srikanth Ramaswamy (Newcastle University), and Thomas Miconi (ML Collective); then conclude with a discussion panel and Q&A.

Thank you for your continued support and interest in Numenta. Follow us on Twitter to make sure you don’t miss any updates.

Christy Maver

VP of Marketing