Abstract:

Dendrites of pyramidal neurons demonstrate a wide range of linear and non-linear active integrative properties. Extensive work has been done to elucidate underlying biophysical mechanisms, but our understanding of the computational contributions of active dendrites remains limited. As such the vast majority of artificial neural networks (ANNs) ignore the structural complexity of biological neurons and use simplified point neurons.

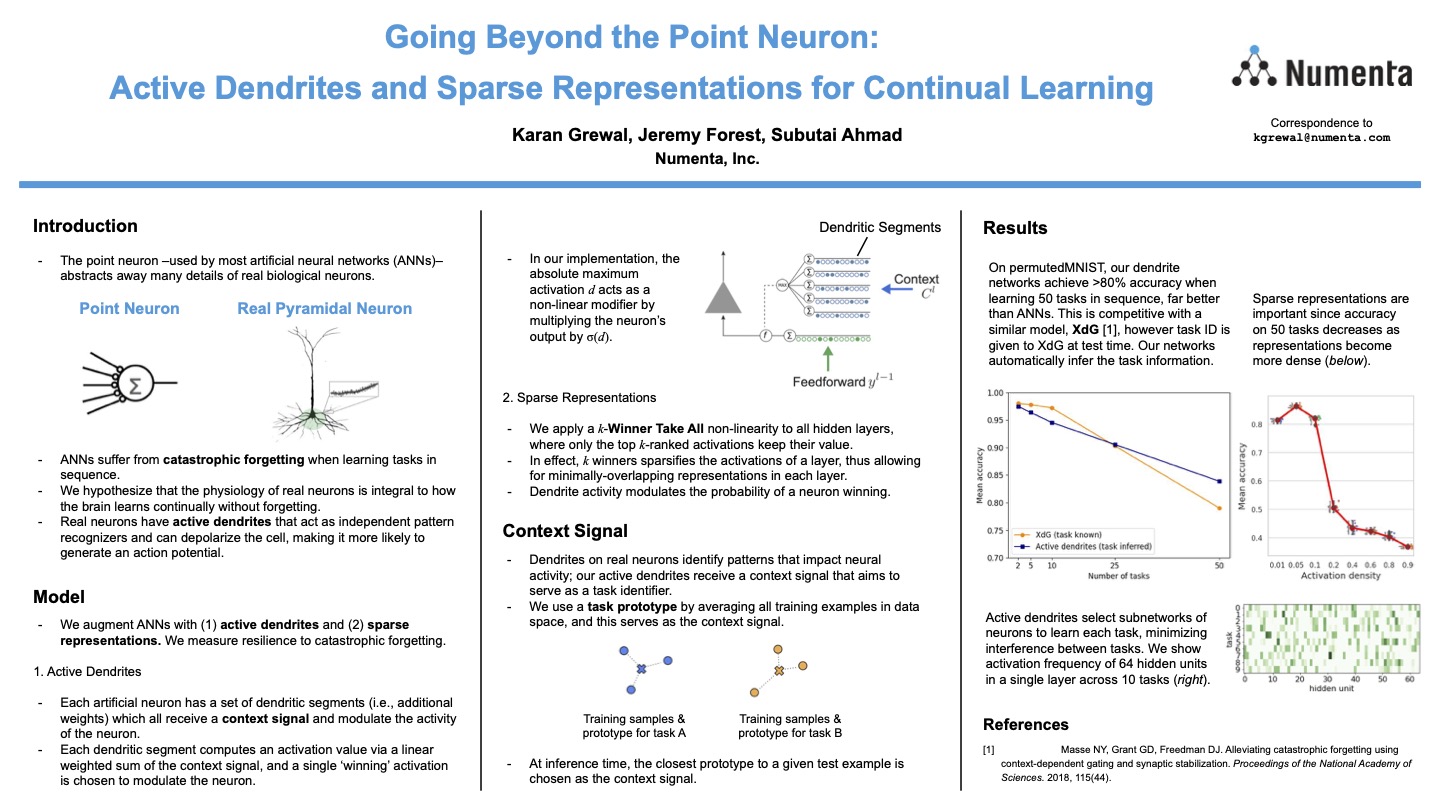

In this paper we propose that active dendrites can help ANNs learn continuously, a property prevalent in biological systems but absent in artificial systems. (Most ANNs today suffer from catastrophic forgetting, i.e. they are unable to learn new information without erasing what they previously learned.) Our model is inspired by two key properties: 1) the biophysics of sustained depolarization following dendritic NMDA spikes, and 2) highly sparse representations. In our model, active dendrites act as a gating mechanism where dendritic segments detect task-specific contextual patterns and modulate the firing probability of postsynaptic cells. A winner-take-all circuit at each level gives preference to up-modulated neurons, and activates a highly sparse subset of neurons. These task-specific subnetworks have minimal overlap with each other, and this in turn minimizes the interference in error signals. As a result, the network does not forget previous tasks as easily as in standard networks without active dendrites.

We compare our model to two others. Dendritic gated networks (DGNs) [1] compute a linear transformation per dendrite followed by gating. DGNs do not learn dendritic weights and model complexity grows with the number of classes. Context-dependent gating (XdG) [2] turns individual units on/off based on task ID. XdG largely avoids catastrophic forgetting but the task ID and hardcoded network subsets are always required. We tested our model in a standard continual learning scenario, permutedMNIST. Instead of hardcoding task ID, we employ a prototype method to infer task-specific context signals. Results show that dendritic segments learn to recognize different context signals and that this in turn leads to the emergence of independent sub-networks per task. In tests, our dendritic networks achieve 94.4% accuracy when learning 10 consecutive permutedMNIST tasks, and 83.9% accuracy for 50 consecutive tasks. This compares favorably with DGNs and XdG, but without their previously mentioned limitations. (Note that standard ANNs fail at this task and only achieve chance accuracy.) In addition, training is simple and requires only standard backpropagation.

Further analysis shows that the sparsity of representations and number of dendrites correlate positively with overall accuracy. Our technique is complementary to other continual learning strategies, such as EWC/Synaptic Intelligence and experience replay, and thus can be combined with them. Our results suggest that incorporating the structural properties of active dendrites and sparse representations can help improve the accuracy of ANNs in a continual learning scenario.

References

- Sezener E, Grabska-Barwinska A, Kostadinov D, et al. A rapid and efficient learning rule for biological neural circuits. BioRxiv preprint. 2021.

- Masse NY, Grant GD, Freedman DJ. Alleviating catastrophic forgetting using context-dependent gating and synaptic stabilization. Proceedings of the National Academy of Sciences. 2018, 115(44).