Numenta + Intel achieve 123x inference performance improvement for BERT Transformers

Intel recently released the new 4th Generation Intel Xeon Scalable Processor (formerly codenamed Sapphire Rapids). Read our press release for more details on our collaboration with Intel in accelerating inference performance for large language models.

CHALLENGE

Meeting the high-throughput, low-latency demands of real-time NLP applications

For real-time Natural Language Processing (NLP) applications, high-throughput, low-latency technologies are a requirement. While Transformers have become fundamental within NLP, their size and complexity have made it nearly impossible to meet these rigorous performance demands cost-effectively. One application that requires both low latency and high throughput is Conversational AI, one of the fastest-growing AI and NLP markets, expected to reach $40 billion by 2030.

SOLUTION

Numenta’s optimized models plus Intel’s latest Xeon Scalable Processor unlock performance gains

In collaboration with Intel, we combined our proprietary, neuroscience-based technology for dramatically accelerating Transformer networks with Intel’s new Advanced Matrix Extensions (Intel AMX), available in the 4th Gen Intel Xeon Scalable processors (formerly codenamed Sapphire Rapids). This combination of algorithmic and hardware advances led to unparalleled performance gains for BERT inference on short text sequences.

RESULTS

123x throughput performance improvement and sub-9ms latencies for BERT-Large Transformers

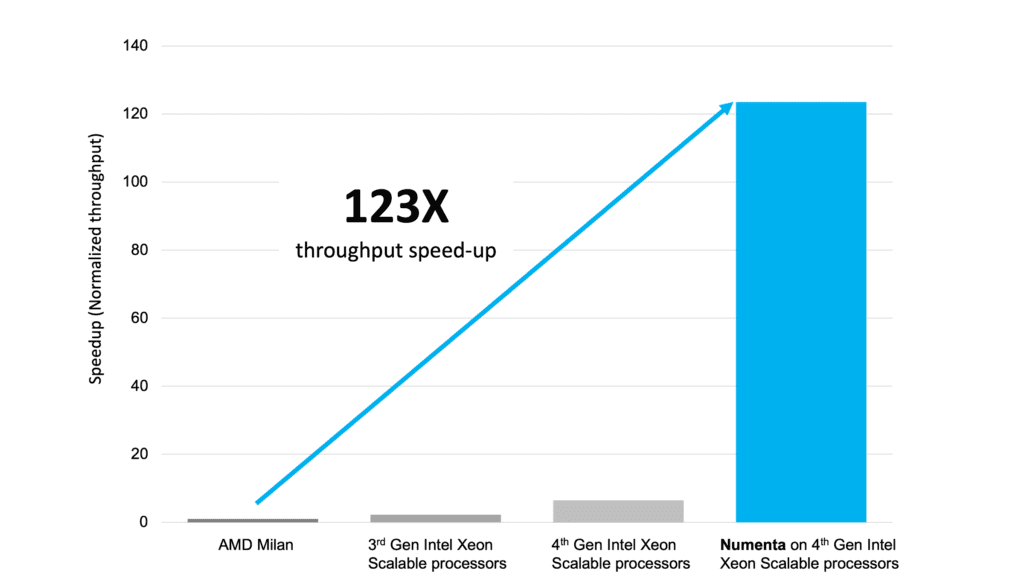

We integrated our technology into Intel’s OpenVINO toolkit and compared the inference performance of Numenta’s BERT-Large model on the 4th generation 56-core Intel Xeon Scalable Processor with the performance of traditional ONNX BERT-Large SQuAD models on a variety of processors. The chart illustrates the throughput improvements achieved when comparing Numenta’s BERT model on the new Intel processor with an equivalent traditional BERT-Large model running on 48-core AMD Milan, 32-core Intel 3rd generation, and 56-core Intel 4th generation Xeon processors, all at a batch size of 1.

In this example, we optimized for latency, imposing the 10ms latency limit that is often used for real-time applications. Numenta’s BERT-Large model on Intel’s 4th generation Xeon processor was the only combination able to stay under the 10ms latency threshold. These results illustrate a highly scalable, cost-effective option for running the large deep learning models necessary for Conversational AI and other real-time AI applications.
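As a rough illustration of how a latency-constrained comparison like this can be set up, the Python sketch below times single-request (batch size 1) calls, checks the result against a 10ms budget, and computes a throughput ratio between two configurations. It is not the harness used for the results above: the function names (`benchmark`, `meets_budget`, `speedup`), the single sequential request stream, and the use of the 99th-percentile latency for the budget check are all assumptions made for this example, and `fake_model` is only a stand-in for a real inference call.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(infer: Callable[[], object], warmup: int = 5, runs: int = 50) -> Dict[str, float]:
    """Time sequential batch-size-1 calls to `infer` (hypothetical helper)."""
    for _ in range(warmup):
        infer()  # warm-up calls are not timed

    latencies_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        latencies_ms.append((time.perf_counter() - start) * 1000.0)

    latencies_ms.sort()
    mean_ms = statistics.fmean(latencies_ms)
    p99_index = min(len(latencies_ms) - 1, int(0.99 * len(latencies_ms)))
    return {
        "mean_ms": mean_ms,
        "p99_ms": latencies_ms[p99_index],
        # Assumption: one request in flight at a time, so throughput is 1 / mean latency.
        "throughput_per_s": 1000.0 / mean_ms if mean_ms > 0 else float("inf"),
    }


def meets_budget(stats: Dict[str, float], budget_ms: float = 10.0) -> bool:
    """True if the tail latency stays strictly under the budget."""
    return stats["p99_ms"] < budget_ms


def speedup(candidate: Dict[str, float], baseline: Dict[str, float]) -> float:
    """Throughput of the candidate divided by throughput of the baseline."""
    return candidate["throughput_per_s"] / baseline["throughput_per_s"]


def fake_model() -> int:
    """Stand-in workload; replace with a real single-input inference call."""
    return sum(i * i for i in range(1000))


if __name__ == "__main__":
    stats = benchmark(fake_model)
    print(stats, "within 10ms budget:", meets_budget(stats))
```

In practice, the same `benchmark` call would be run once per model and processor combination, and `speedup` applied only to the configurations being compared. A production benchmark would typically also run several concurrent request streams across cores, which this sketch does not attempt.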

BENEFITS

Breakthrough performance gains open new possibilities for real-time NLP and AI

The technology synergies between Numenta and Intel have turned Transformers from a costly, complex option into a highly performant, cost-effective solution for real-time NLP applications like Conversational AI.

- Industry-leading performance breakthroughs for Transformer networks, with 123x throughput speed-up while maintaining sub-9ms latencies

- Cost-effective options for running the large deep learning models necessary for AI and NLP applications

- New possibilities for many time-sensitive AI applications that can finally deploy Transformer models in production

Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries.
