This spring, we hosted a Brains@Bay meetup on Exploring Neuromodulators and How They Might Impact AI. The three-hour-long meetup featured Srikanth Ramaswamy, the principal investigator of Neural Circuits Laboratory from Newcastle University; Jie Mei, a Postdoc from the Brain and Mind Institute at the University of Ontario; and Thomas Miconi, a member of ML Collective. Each speaker gave a presentation, and the talks were followed by a discussion panel led by our VP of Research and Engineering, Subutai Ahmad.

We always look forward to hearing from our invited guests at these meetups, but particularly so for this one because the subject is an important yet often overlooked topic in AI. Our speakers did not disappoint, and the meetup was jam-packed with insights on how neuromodulators can lead to more flexible and adaptive AI. I encourage you to watch the replay for the full deep dive, but in this blog, I’ll highlight the top takeaways:

The Neuroscience of Neuromodulators

1. Neuromodulators enable diverse spatial and temporal functional effects across the brain

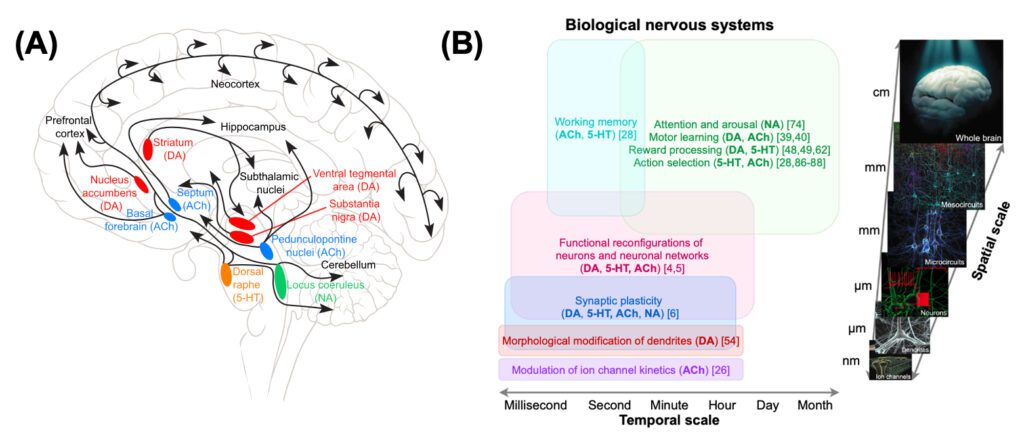

Neuromodulators, such as dopamine and serotonin, are signaling chemicals that are distributed across different regions in the neocortex. In Prof. Srikanth Ramaswamy’s talk ‘A Primer on Neuromodulatory Systems’, he said that neuromodulators “dynamically reconfigure or change activity states of neurons and synapses, to bring about millions of possibilities at the network level.” As such, neuromodulators are key mechanisms that enable dynamic and autonomous decision-making in mammals.

Biological nervous systems learn through observing and interacting with the world around them. Srikanth showed that there is a diverse set of neuromodulatory systems that emerge from and target different brain regions, projecting from the subcortical regions to the neocortex. These neuromodulators bind to different receptors that are distributed across neurons, synapses, and microcircuits, which can lead to varied multiscale functional effects. Srikanth also stated that the same neuromodulatory system can have different effects depending on the receptor it binds to and where that receptor is located. Similarly, the same neuron can be targeted by multiple neuromodulators, and the effects can vary greatly depending on the type of neuromodulator and the receptor it binds to.

In Dr. Jie Mei’s talk ‘Implementing multi-scale neuromodulators in artificial neural networks’, she gave examples of the spatial and temporal functional effects of these systems. For instance, acetylcholine (ACh) is a neuromodulator that modulates ion channel kinetics, while dopamine (DA) plays a key role in motor learning and reward-based learning and reconfigures neuronal networks across different time periods.

Figure 1: (A) Srikanth showed that a diverse set of neuromodulatory systems project across different brain regions. (B) Mei gave examples of the spatial and temporal functional effects of neuromodulatory systems. (Mei et al., 2022, Trends in Neurosciences)

Both Srikanth and Mei emphasized that the spatiotemporal combination of neuromodulatory function is what makes biological neural networks adaptive to environmental contexts.

2. Interactions between neuromodulators are incredibly important

The brain contains a large diversity of neurons that constantly interact and communicate with each other through synapses. The same goes for neuromodulators. Srikanth expressed that neuromodulators never act in isolation. They always function in cooperation or opposition to bring changes in brain states.

The multi-scale effects of neuromodulators from different areas of the brain ultimately come together and act at the network level to regulate cortical states. Since the activities of neurons are constantly changing, Srikanth described the interaction of neuromodulatory systems as “a relay race, where one neuromodulator sets the stage for another neuromodulator to take over.” These interactions bring about different brain states, which give us the flexibility to learn, plan and take actions.

Applying Neuromodulatory Principles to AI

1. A framework for incorporating neuromodulators in deep learning systems

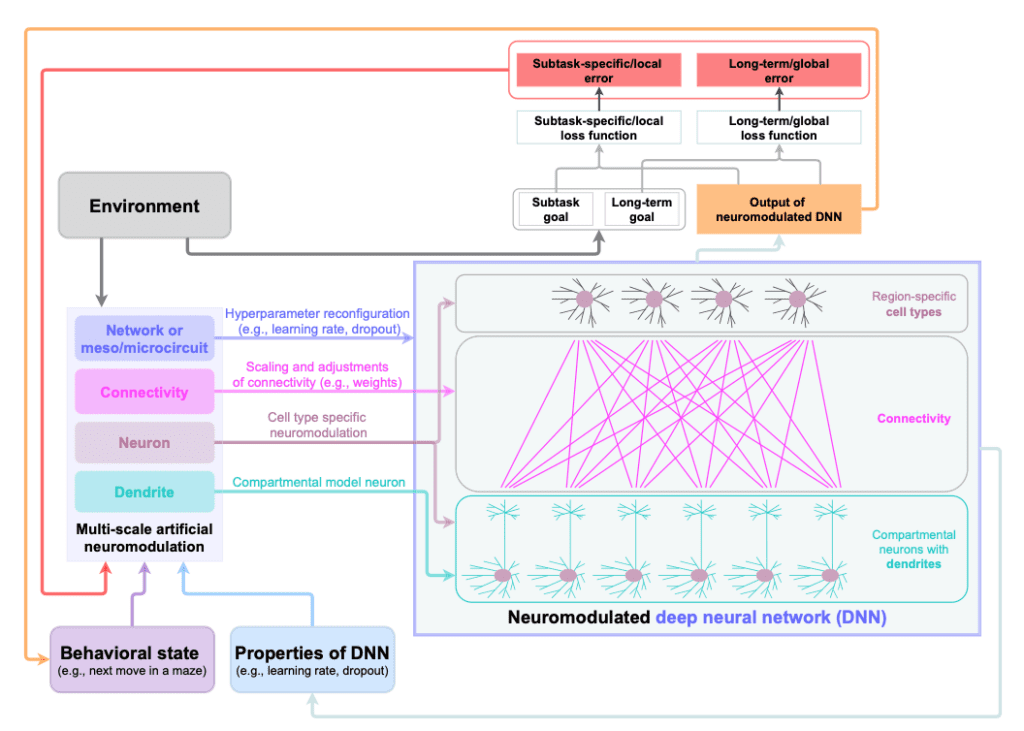

Based on the neuromodulatory principles of biological nervous systems, in their paper and their talk, Srikanth and Mei proposed the following framework for incorporating neuromodulators into deep neural networks:

Figure 2: A framework for integrating multiscale neuromodulatory systems into deep neural networks. Neuromodulatory effects are represented at four different spatial scales. (Mei et al., 2022, Trends in Neurosciences)

Mei outlined a multi-scale artificial neuromodulation unit that is divided into different spatial and temporal scales. At each level, the neuromodulators have different effects such as adjusting network hyperparameters, scaling and adjusting connectivity with plasticity, modulating specific neurons and reconfiguring dendritic computation properties.
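To make the idea of modulation at several scales more concrete, here is a minimal sketch in plain Python. It is not the implementation from the paper or the talk. The function names, the scalar `modulator`, the per-neuron `gains`, and the per-connection `plasticity_mask` are all hypothetical, and the dendritic scale is left out for brevity. The sketch only illustrates three of the effects listed above: scaling a network-wide hyperparameter, scaling the plasticity of individual connections, and modulating specific neurons.

```python
import math


def modulated_layer(inputs, weights, gains):
    """Forward pass of one layer with neuron-level modulation.

    Each output unit has its own gain (an assumed stand-in for a
    neuromodulator acting on a specific neuron) that scales its drive.
    """
    outputs = []
    for w_row, gain in zip(weights, gains):
        drive = sum(w * x for w, x in zip(w_row, inputs))
        outputs.append(math.tanh(gain * drive))
    return outputs


def modulated_update(weights, inputs, outputs, base_lr, modulator, plasticity_mask):
    """Hebbian-style weight update gated at two scales.

    - Network level: `modulator` scales the global learning rate,
      which is a hyperparameter of the whole network.
    - Connection level: `plasticity_mask[i][j]` (between 0 and 1) sets
      how plastic each individual connection is.
    Returns a new weight matrix and leaves the input untouched.
    """
    lr = base_lr * modulator
    return [
        [
            w + lr * plasticity_mask[i][j] * outputs[i] * inputs[j]
            for j, w in enumerate(w_row)
        ]
        for i, w_row in enumerate(weights)
    ]
```

With `modulator` set to 0 the weights stay frozen, and a connection whose mask entry is 0 never changes regardless of the modulator, which is one simple way to picture how the same signal can have different effects at different sites.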

Mei then showed that incorporating a neuromodulatory model in deep neural networks can achieve many behavioral benefits:

- Improve model performance: agents obtain higher rewards and learn faster and better when introduced to a neuromodulatory input that regulates plasticity.

- Learn adaptive behavior: agents are more adaptive and robust.

- Alleviate catastrophic forgetting: a modularity-based neural network improves learning and helps with learning new tasks without forgetting old ones.

- Enable state-dependent behavior: local neuromodulatory systems allow the network to learn more patterns and create distinct memory regions depending on context.

2. Neuromodulatory-based networks can learn novel tasks faster

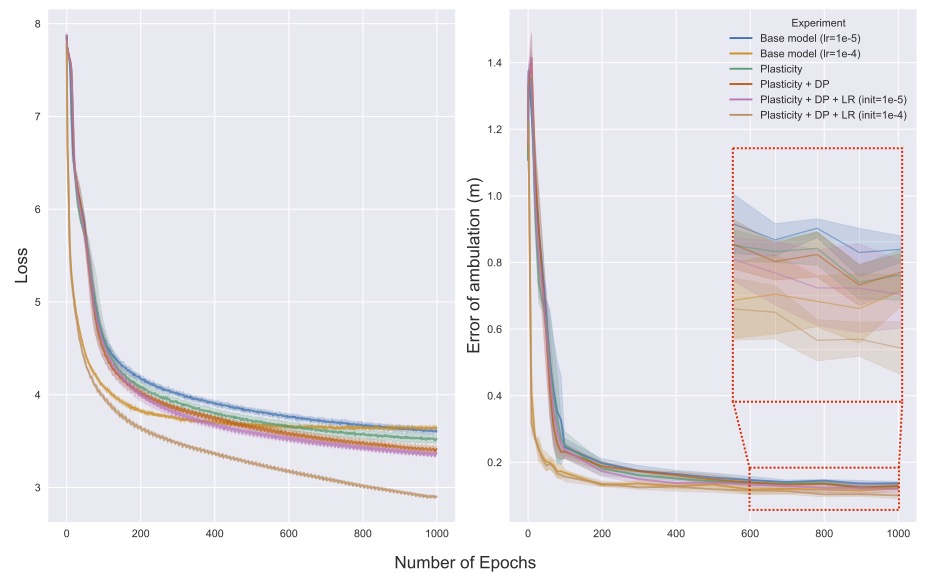

Mei showed that, in her own studies based on the proposed framework, incorporating a neuromodulatory model at varying spatial scales of the neural network, namely at the single-unit, layer and whole-network levels, can lead to “significantly faster learning.” She introduced her team’s work at the lab, where they tasked a network with navigating an open field. As different neuromodulatory components are added to different levels of the network, Mei said they have “seen continuous improvement” in model learning, as indicated by the loss and error of ambulation during model training.

Figure 3: Effects of multi-scale neuromodulation on model loss and error of ambulation. Mei noted that models with neuromodulatory processes at the network and connectivity levels learn first marginally, then significantly, faster.

She noted that rapid learning occurs in the early trials, and that after 200 passes (i.e., epochs) a larger gap in the error of ambulation opens up between the base model and the neuromodulatory models. For instance, the error of ambulation that the base model achieved at 500 epochs was comparable to the error that one of the neuromodulatory models achieved in fewer than 150 epochs.

Mei further highlighted that a significant portion of the learning occurs at the beginning, when a faster evolution of cell patterns can be observed. By the end of model training, Mei said, a clear pattern of cell types, such as grid cells and place cells, emerges. In their ongoing studies, Mei and her team have further observed neuromodulation-induced changes in cell activity patterns and properties.

3. Neuromodulatory-based networks can regulate plasticity and allow networks to automatically learn novel tasks

An important function of neuromodulators is their role in synaptic plasticity: they can substantially reshape the spike-timing window that governs plasticity.
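As a rough illustration of what reshaping a spike-timing window can mean, the sketch below uses the standard pair-based spike-timing-dependent plasticity (STDP) rule with exponential windows. How a neuromodulator enters the rule is an assumption made for this example only: here a hypothetical `modulator` level simply stretches or shrinks the time constant of the window. The function name and all parameter values are illustrative and were not presented at the meetup.

```python
import math


def stdp_weight_change(dt_ms, modulator=1.0, a_plus=0.01, a_minus=0.012, tau_ms=20.0):
    """Weight change for one pre/post spike pair under pair-based STDP.

    dt_ms is t_post - t_pre. A positive value (pre fires before post)
    potentiates the synapse; a negative value depresses it.
    `modulator` is an assumed neuromodulator level that scales the width
    of the timing window; 0 closes the window entirely.
    """
    tau = tau_ms * modulator
    if tau <= 0.0 or dt_ms == 0.0:
        return 0.0
    if dt_ms > 0.0:
        return a_plus * math.exp(-dt_ms / tau)
    return -a_minus * math.exp(dt_ms / tau)
```

Under this assumed form, a spike pair separated by 40 ms produces a larger weight change when `modulator` is 2.0 than when it is 1.0, because the wider window still counts the two spikes as related.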

In Dr. Thomas Miconi’s talk ‘How to Evolve Your Own Lab Rat’, he introduced a network that incorporated a model of dopamine-modulated synaptic plasticity. Dopamine is a neuromodulator that is well known for responding to rewards and for playing a key role in motivation by modifying the brain’s synaptic plasticity. There is also emerging evidence that dopamine can modulate learning and memory.

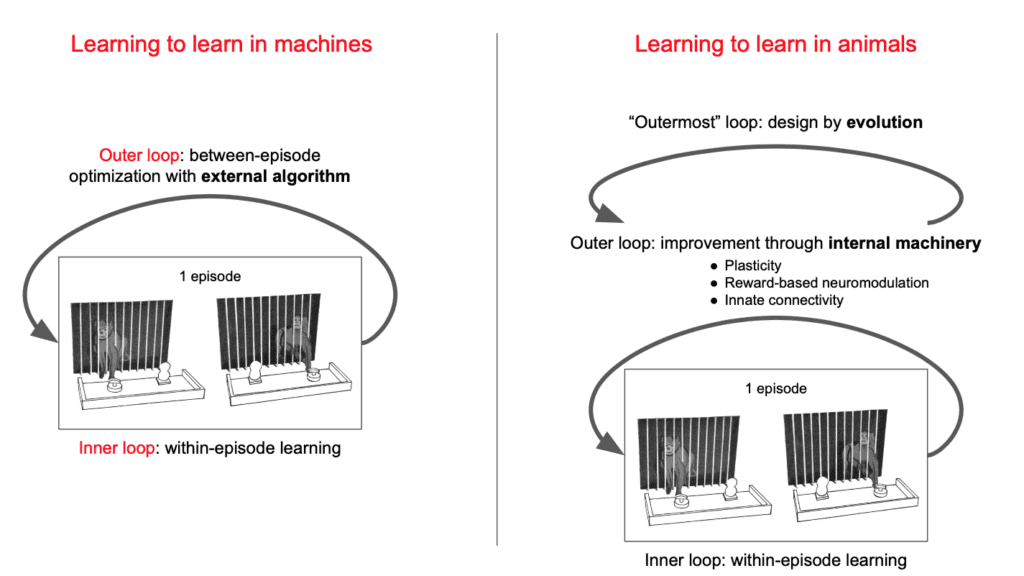

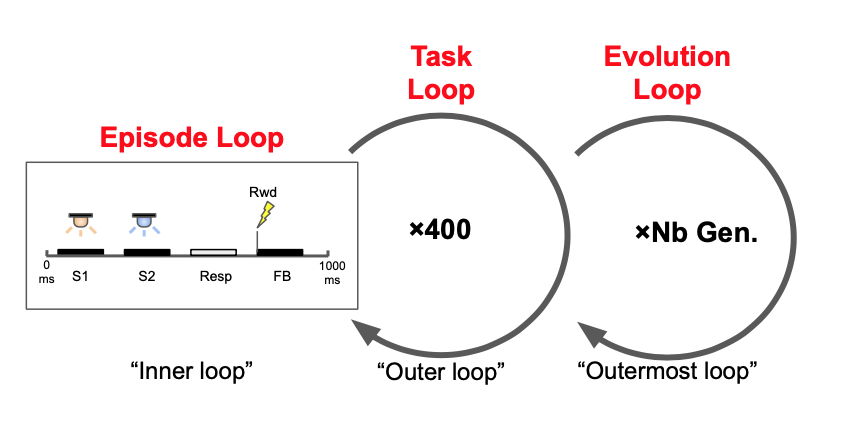

Mammals can quickly identify tasks after a few exposures to stimuli and rewards, and Thomas noted that this cognitive ability of “learning how to learn” is similar to the concept of meta-learning in deep learning. Thomas explained that in meta-learning, learning is usually split into two loops. The learning agent is first “shown two stimuli in succession with delays, and produces a response that receives a certain reward which affects the neuromodulation” of the model. When the agent performs a task a few times within that “episode”, the “inner loop” stores the information the agent has learned within the episode, such as associating the stimuli and reward.

Figure 4: Thomas showed that machines use an external algorithm to optimize between episodes, while animals improve through internal machinery and evolution.

Between episodes, there is an “outer loop” of learning during which an external algorithm optimizes the agent, so it gets better when it performs tasks in the inner loop. However, when we look at biological agents, optimizing in the outer loop does not occur through an external algorithm. It is not biologically feasible. For example, backpropagation does not occur in the brain. On the contrary, the brain has self-modifying abilities based on plasticity, reward-based neuromodulation, and its innate connectivity. Hence, in Thomas’ study, he applied a neuromodulatory plasticity model to the network to optimize the outer loop internally.

Then, Thomas introduced a third, “outermost learning loop,” which incorporates a model of evolution. Evolution optimizes the plasticity and innate connectivity of the network, so that it gets better at learning new tasks, as described above. Similar to what we see in biological nervous systems, on top of optimizing between the outer and inner learning loops, Thomas showed that as time goes on, the artificial agent goes through an evolutionary optimization. This optimization allows the agent to “acquire task-learning abilities and manages to learn the task pretty well even though it has never seen it before”. The neuromodulatory models involved allow the agent to automatically learn the task and get better and better, simply through exposure to stimuli and reward alone.

Figure 5: Meta-meta learning allows the agent to automatically learn the task internally simply through exposure to stimuli and reward alone. (Miconi, 2021, arXiv)
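The nested loops described above can be sketched with a deliberately tiny toy problem. This is not Thomas’ model, which evolves recurrent networks; the task, the function names (`run_episode`, `fitness`, `evolve`) and every parameter here are hypothetical. In each episode one of two options is secretly the rewarded one, the agent’s plastic weights start from zero, and the only way it can learn within the episode is a reward-gated Hebbian update whose strength `eta` is innate. Evolution then mutates and selects `eta` across generations.

```python
import random


def run_episode(eta, rng, n_trials=20):
    """One episode: a new hidden rule, learned only through plasticity."""
    rewarded = rng.choice([0, 1])   # hidden rule, redrawn every episode
    plastic = [0.0, 0.0]            # plastic weights reset at episode start
    total = 0.0
    for _ in range(n_trials):       # trials within the episode
        if plastic[0] == plastic[1]:
            choice = rng.choice([0, 1])   # no preference yet: guess
        else:
            choice = 0 if plastic[0] > plastic[1] else 1
        reward = 1.0 if choice == rewarded else -1.0
        # Reward acts as the neuromodulatory signal gating the update;
        # eta is the innate plasticity coefficient that evolution tunes.
        plastic[choice] += eta * reward
        total += reward
    return total / n_trials


def fitness(eta, rng, episodes=10):
    """Average reward over several episodes, each with a fresh task."""
    return sum(run_episode(eta, rng) for _ in range(episodes)) / episodes


def evolve(generations=30, pop_size=20, episodes=10, seed=0):
    """Evolutionary loop: keep the best quarter, refill with mutated copies."""
    rng = random.Random(seed)
    population = [rng.gauss(0.0, 0.1) for _ in range(pop_size)]
    for _ in range(generations):
        ranked = sorted(
            population,
            key=lambda eta: fitness(eta, rng, episodes),
            reverse=True,
        )
        parents = ranked[: max(1, pop_size // 4)]
        population = [
            parents[i % len(parents)] + rng.gauss(0.0, 0.02)
            for i in range(pop_size)
        ]
    return population
```

In this toy setting only the sign of `eta` matters: a positive value lets the agent lock onto the rewarded option after at most one mistake, while a negative value makes it lock onto the wrong one. Nothing outside the agent adjusts its weights during an episode; selection acts only on the innate plasticity parameter, which is the point the loop structure is meant to convey.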

Conclusion

Biological neuromodulatory systems have numerous spatial and temporal effects on all cortical regions and they provide insight into how we are able to learn and adapt quickly and flexibly. As research in this field continues, we hope to see more studies showing the impact of neuromodulators at various scales. The brain is far more intelligent than any deep learning system out there due to its flexibility and efficiency. It provides the blueprint for creating general-purpose machines.

The framework Srikanth and Mei proposed identifies the biological details required to build neuromodulatory-based deep neural networks, and Thomas demonstrated that a neuromodulatory-based network is capable of automatically learning novel cognitive tasks. Incorporating neuromodulatory principles in deep neural networks has tremendous potential to capture aspects of human intelligence that existing networks have failed to capture.

Check out the full video recording for more. Thanks to our speakers and members for joining us and Subutai for hosting a great discussion! If you have any questions for the authors, you can reach out via Twitter @neuro_Mei, @srikipedia and @ThomasMiconi

About Brains@Bay

Brains@Bay is a meetup hosted by Numenta with the goal of answering the overarching question, “what new lessons from the brain can help us move beyond the limitations of machine learning?”

At each meetup, we bring together experts and practitioners at the intersection of neuroscience and AI, and explore topics such as sensorimotor learning, the role of grid cells, alternatives to backpropagation, and more. All our meetups are recorded and available here.

If you have comments, questions, or ideas for future talks, feel free to contact me at clai@numenta.com. If you’re interested in joining the next event, join our Brains@Bay community or follow us for updates.

Citations

Mei, Muller and Ramaswamy. Informing deep neural networks by multiscale principles of neuromodulatory systems, Trends in Neurosciences, 2022.

Thomas Miconi. Learning to acquire novel cognitive tasks with evolution, plasticity and meta-meta-learning, arXiv, 2021.