Advancing deep learning and AI with brain-based technology

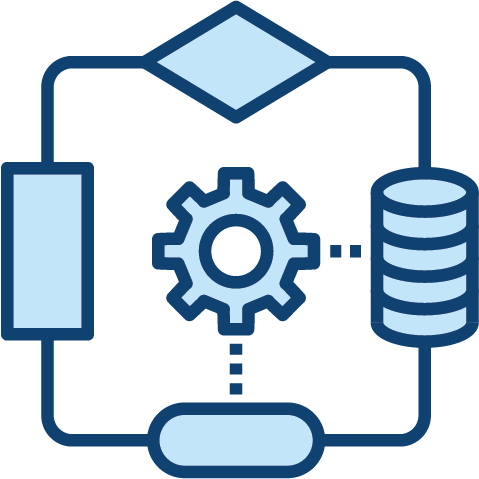

Numenta Platform for Intelligent Computing (NuPIC)

Inference Server

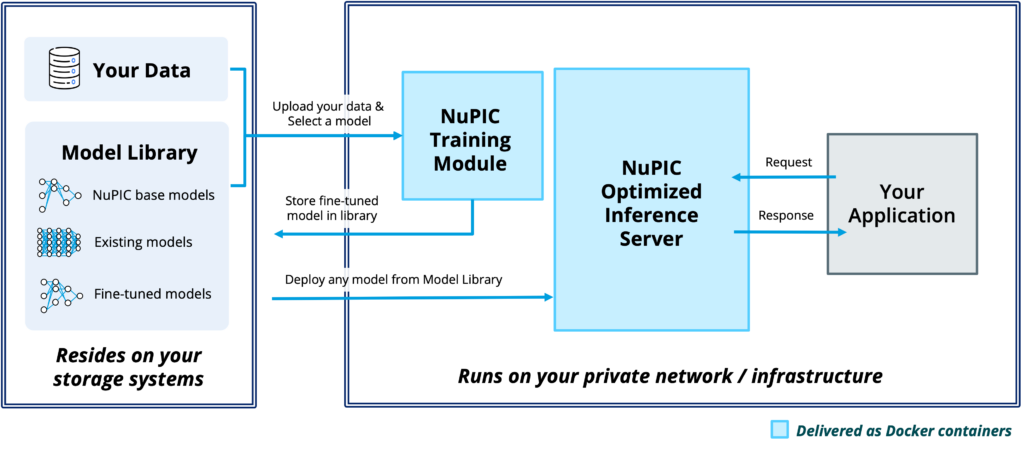

The heart of NuPIC is our optimized inference server. This component contains our hand-optimized AMX (Intel Advanced Matrix Extensions) code and our optimized libraries for running transformer networks efficiently on Intel CPUs.

The NuPIC Inference Server uses industry-standard protocols and includes a simple HTTP-based API for accessing models and performing inference. It is deployed using the industry-standard Triton server, making NuPIC easy to integrate into almost any standard MLOps solution, such as Kubernetes. Because the Inference Server is CPU-based, a single instance of the server can run dozens of different models in parallel. Each model runs in a separate process and is completely asynchronous. No batching or synchronization is required to achieve maximal throughput. In fact, you can mix and match generative and non-generative models within the same instance!
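As a rough illustration of what an HTTP inference call to a Triton-based server can look like, the sketch below builds a request in the KServe v2 style that Triton's HTTP endpoint accepts. It is only a sketch under assumptions: the host and port, the model name `example-model`, and the input tensor name `TEXT` are hypothetical placeholders, and NuPIC's own client API may wrap or differ from this.

```python
import json
import urllib.request


def build_infer_request(base_url, model_name, input_name, texts):
    """Build (but do not send) a KServe-v2-style inference request."""
    payload = {
        "inputs": [
            {
                "name": input_name,       # hypothetical input tensor name
                "shape": [len(texts)],    # one string element per text
                "datatype": "BYTES",      # v2 protocol datatype for strings
                "data": texts,
            }
        ]
    }
    url = f"{base_url}/v2/models/{model_name}/infer"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical server address and model name, for illustration only.
request = build_infer_request(
    "http://localhost:8000", "example-model", "TEXT", ["Hello, NuPIC"]
)

# Sending the request requires a running server:
# with urllib.request.urlopen(request) as response:
#     result = json.loads(response.read())
```

Because each request names its model in the URL, a client can address several models on one server instance simply by changing `model_name`.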

Training Module

NuPIC also includes a training module, which enables you to improve the accuracy of your models using your own data. You can choose one of the base models in the model library, upload your data to the training module, and fine-tune the model on that data. This makes the model more specific to your application and typically improves accuracy significantly. The fine-tuned models are then stored back in the library. Any model, whether a NuPIC base model or a fine-tuned model, can be easily deployed using the NuPIC Inference Server.

Delivered via Docker

NuPIC components are delivered as Docker containers. You can install the inference server on any Intel-compatible server in your infrastructure, in your private cloud, or even on your laptop. Similarly, the training module can be installed easily in your infrastructure.

Runs in your private network

NuPIC runs completely within your private network and corporate infrastructure. Your data and the models reside entirely on your private storage systems. You have complete control over the models, when they are updated, your data, and your data governance policies.

Python-Based Examples

Ease of use was a key design consideration. NuPIC comes with a set of Python-based example applications that showcase how easy it is to build NLP-based applications on top of the platform. The examples show how to run inference and fine-tuning.

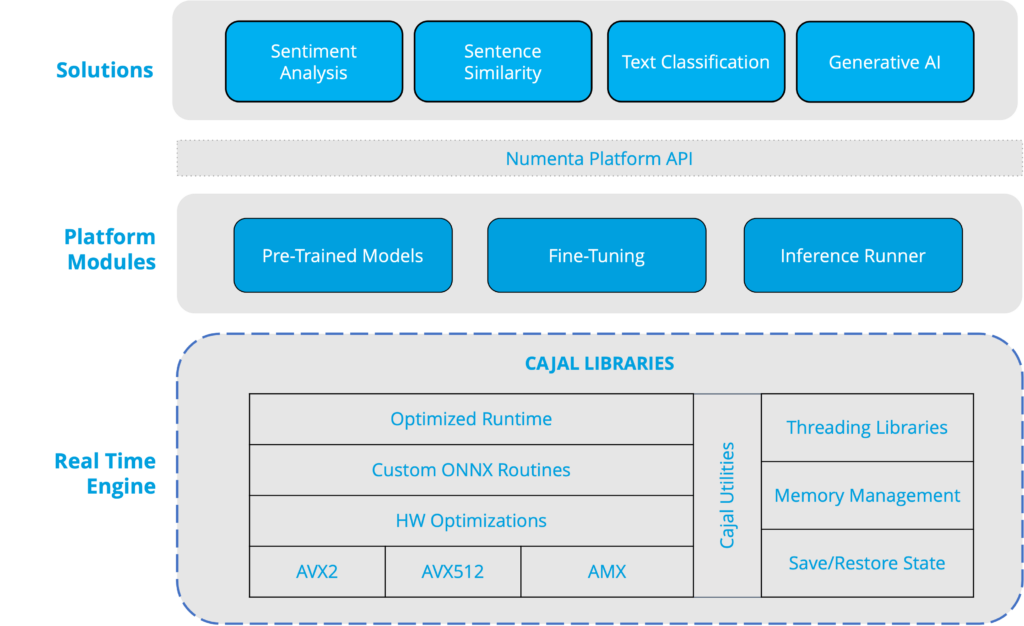

Cajal Libraries

Within the NuPIC Inference Server is the NuPIC real-time engine, built using our internal Cajal libraries (named after Santiago Ramón y Cajal, widely regarded as the founder of modern neuroscience). The Cajal libraries are written in a combination of C++ and assembly. They include an optimized runtime, custom ONNX routines, and a set of hardware optimizations that leverage SIMD instructions, specifically AVX2, AVX-512, and AMX.
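To check whether a given Linux host exposes the instruction sets named above, one option is to read the CPU flags from `/proc/cpuinfo`. The sketch below is not part of NuPIC; the function names are illustrative, and it assumes the standard Linux flag names `avx2`, `avx512f` (the AVX-512 foundation subset), and `amx_tile`.

```python
from pathlib import Path

# Linux /proc/cpuinfo flag names for the instruction sets named above.
SIMD_FLAGS = {
    "AVX2": "avx2",
    "AVX-512": "avx512f",
    "AMX": "amx_tile",
}


def detect_simd(cpuinfo_text):
    """Report which instruction sets appear in /proc/cpuinfo-style text."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            # Everything after the first colon is a space-separated flag list.
            flags.update(line.partition(":")[2].split())
    return {name: flag in flags for name, flag in SIMD_FLAGS.items()}


def detect_simd_on_this_machine(path="/proc/cpuinfo"):
    """Return the report for this host, or None where the file is missing."""
    cpuinfo = Path(path)
    if not cpuinfo.exists():
        return None  # e.g. macOS or Windows
    return detect_simd(cpuinfo.read_text())
```

Calling `detect_simd_on_this_machine()` returns a dictionary such as `{"AVX2": ..., "AVX-512": ..., "AMX": ...}` with a boolean per instruction set.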

Cajal is designed for high throughput. It minimizes data movement and memory bandwidth use: memory management routines maximize the use of L1 and L2 cache and share memory between models where appropriate. Internally, our AMX codebase uses the bf16 (bfloat16) format, which reduces the bandwidth required for matrix calculations without compromising accuracy, a common problem with more aggressive quantization and lower-precision formats. No specific quantization step is required to use models within NuPIC.
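To make the bf16 trade-off concrete: bfloat16 keeps the sign bit and all 8 exponent bits of a 32-bit float and shortens the mantissa from 23 bits to 7, so each value takes half the memory while covering the same numeric range. The sketch below illustrates the format itself in plain Python; it is not Cajal code, the function names are illustrative, and it assumes round-to-nearest-even conversion.

```python
import math
import struct


def float32_to_bf16_bits(value):
    """Round a float to bfloat16 and return its 16-bit pattern."""
    if math.isnan(value):
        return 0x7FC0  # a canonical quiet NaN
    # Reinterpret the float32 encoding as an unsigned 32-bit integer.
    bits = struct.unpack("<I", struct.pack("<f", value))[0]
    # Round to nearest even before dropping the low 16 mantissa bits.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def bf16_bits_to_float(bits16):
    """Expand a 16-bit bfloat16 pattern back to a Python float."""
    return struct.unpack("<f", struct.pack("<I", bits16 << 16))[0]


pi_bits = float32_to_bf16_bits(3.14159265)
pi_bf16 = bf16_bits_to_float(pi_bits)  # 3.140625: about 3 significant digits
large = bf16_bits_to_float(float32_to_bf16_bits(1e30))  # range is preserved
```

A value such as `1e30`, which overflows 16-bit IEEE half precision (fp16), still round-trips through bf16 with a small relative error.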

Leveraging insights from cortical circuitry, structure and function

Data Structures

Based on how information is represented in the brain, Numenta data structures are highly flexible and versatile, applicable to many different problems in many different domains.

Architecture

Based on biophysical properties of the brain, Numenta’s network architecture dynamically restricts and routes information in a context-specific manner, yielding low-cost solutions for a range of problems.

Algorithms

Based on how information is used in the brain, Numenta algorithms intelligently act on data and adapt as the nature of the problem changes.